The goal of this project was to predict the wind speeds at downstream wind turbines over some future time horizon, given data describing the wind speeds, wind directions, and turbine orientations over some historic time horizon. To this end, several variations of the recurrent neural network (RNN) architecture were implemented and tested. These included models that predicted the change in wind speed from the most recent value (residual) versus models that predicted the wind speed itself (absolute), and models that predicted several future time-steps in a single forward pass (multi-step) versus models that predicted them by feeding their own predictions back into the model (autoregressive).
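The four variants differ mainly in how a network's raw output is turned into a forecast. The sketch below is illustrative, not the project's code: `model` stands for any trained network, and the function names (`to_absolute`, `autoregressive_forecast`, `multistep_forecast`) are hypothetical. For brevity it uses a single wind-speed series rather than the full set of speeds, directions, and yaw angles, and it assumes that a multi-step residual model expresses every future step as an offset from the last observed value.

```python
from typing import Callable, List, Sequence

# Hypothetical model signatures (illustration only):
# a one-step model maps an input window to a single output value,
# a multi-step model maps an input window to one output per future step.
OneStepModel = Callable[[Sequence[float]], float]
MultiStepModel = Callable[[Sequence[float]], List[float]]


def to_absolute(window: Sequence[float], output: float, residual: bool) -> float:
    """Turn a raw model output into a wind speed.

    A residual model predicts the change from the most recent input value;
    an absolute model predicts the wind speed directly.
    """
    return window[-1] + output if residual else output


def autoregressive_forecast(model: OneStepModel, window: Sequence[float],
                            horizon: int, residual: bool = False) -> List[float]:
    """Forecast `horizon` steps, feeding each prediction back as an input."""
    history = list(window)
    preds: List[float] = []
    for _ in range(horizon):
        recent = history[-len(window):]      # keep the input window length fixed
        y = to_absolute(recent, model(recent), residual)
        preds.append(y)
        history.append(y)                    # the prediction becomes an input
    return preds


def multistep_forecast(model: MultiStepModel, window: Sequence[float],
                       residual: bool = False) -> List[float]:
    """Forecast every future step from a single forward pass."""
    # Assumption: residual outputs are offsets from the last observed value.
    return [to_absolute(window, out, residual) for out in model(window)]
```

With a toy residual model that always predicts zero change, the autoregressive rollout reduces to a persistence forecast: `autoregressive_forecast(lambda w: 0.0, [7.0, 8.0, 9.0], 3, residual=True)` returns `[9.0, 9.0, 9.0]`.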

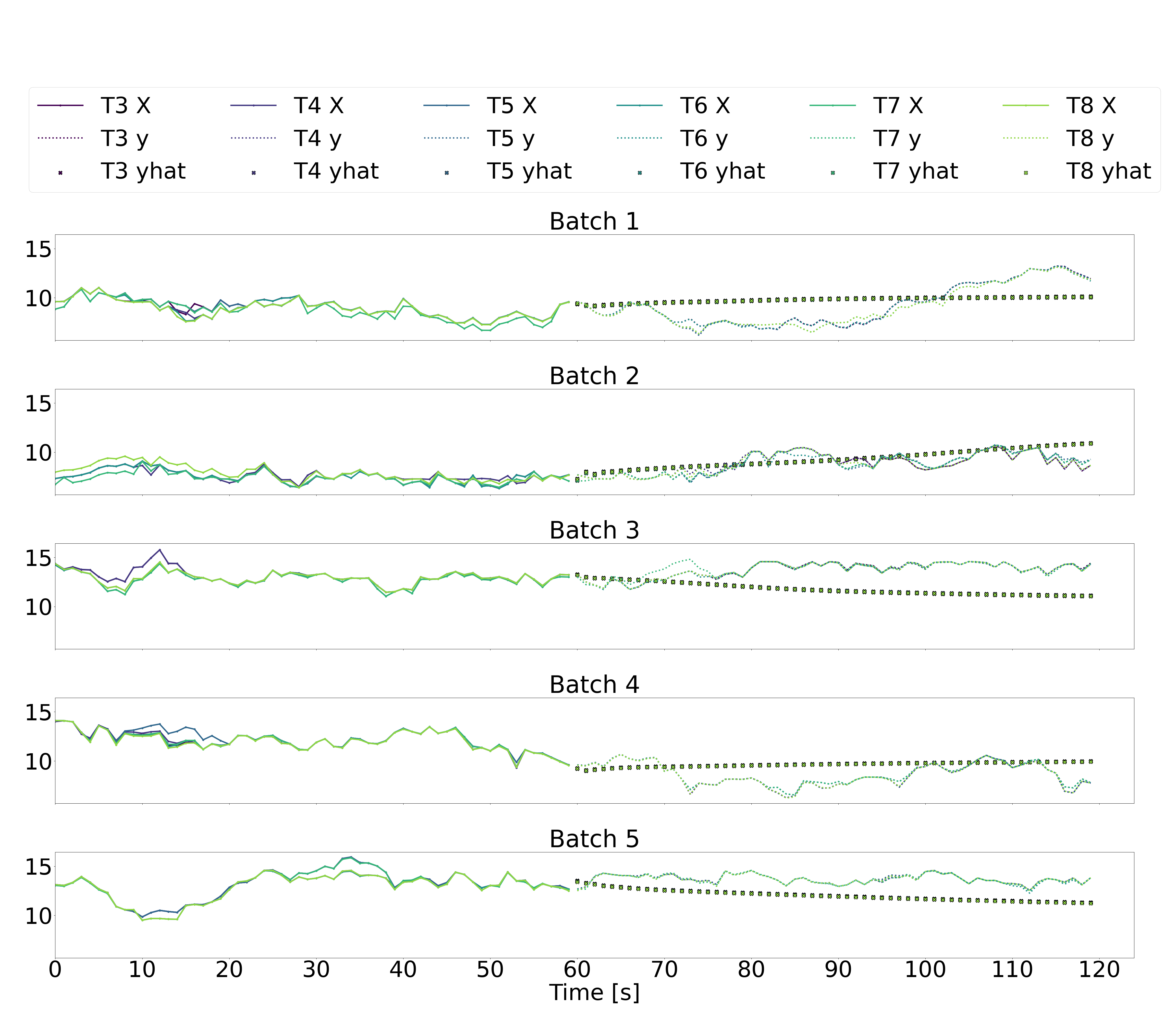

While none of the methods produced unreasonable results (i.e., non-physical wind speeds), none of them produced accurate predictions either, at least judging by time-series plots of the predicted values overlaid on the true values, such as the following example:

To varying degrees, the autoregressive models approximately extrapolate from the most recent input value and then settle to a constant prediction after several time-steps. The multi-step models produce noisy, highly variable predictions. It may be that the chosen input time-window length causes the models to neglect how the inputs vary over time, or that the parameterization of the recurrent neural networks causes them to ‘forget’ all data received before the most recent time-step. In any case, further tuning and adjustment of the architectures is clearly needed to improve performance, with a particular focus on the autoregressive architectures, which generate more reasonable and robust predictions than the multi-step variants. The models may also be improved by refining the definition of the loss function so that it considers either the output at every time-step or the outputs at a subset of the time-steps.
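One way to let the loss consider either every time-step or only a subset is a per-time-step weight vector. The NumPy sketch below is an assumption about how this could be done, not the loss used in the project; `weighted_horizon_mse` and `step_weights` are hypothetical names. A vector of ones reproduces ordinary MSE over the whole horizon, and a 0/1 mask restricts the loss to the selected time-steps.

```python
import numpy as np


def weighted_horizon_mse(y_true, y_pred, step_weights=None):
    """Mean squared error over forecasts of shape (batch, horizon).

    step_weights: optional length-`horizon` vector of non-negative weights.
    None (all ones) scores every time-step equally; a 0/1 mask scores
    only the selected time-steps.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    if y_true.ndim != 2 or y_true.shape != y_pred.shape:
        raise ValueError("expected two arrays of shape (batch, horizon)")
    horizon = y_true.shape[1]
    if step_weights is None:
        w = np.ones(horizon)
    else:
        w = np.asarray(step_weights, dtype=float)
    if w.shape != (horizon,) or np.any(w < 0) or w.sum() == 0:
        raise ValueError("step_weights must be non-negative, of length horizon, and not all zero")
    sq_err = (y_true - y_pred) ** 2            # shape (batch, horizon)
    per_forecast = sq_err @ w / w.sum()        # weighted mean over time-steps
    return float(per_forecast.mean())          # mean over the batch
```

Non-binary weights, for example weights that decay with lead time, fit the same interface if the earlier time-steps should matter more.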

Another point of interest is the discrepancy between the loss function, mean squared error, and the chosen metric, mean absolute percentage error. The former tended to decrease over the course of training and saturated by the third epoch. The latter, however, tended to decrease gradually until approximately a third of the way through an epoch, after which it increased significantly. More investigation is warranted to understand why these two measures do not correlate. It may be that using the mean absolute percentage error as the loss function would be a better choice.
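One possible contributor to the mismatch, offered as a hypothesis rather than a diagnosis: MSE scores errors by their absolute size, whereas MAPE divides each error by the true value, so samples with low wind speeds dominate MAPE. The pure-Python sketch below (hypothetical helpers `mse` and `mape`; the `eps` guard against division by zero is an added assumption) shows two predictions with identical MSE but very different MAPE.

```python
def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred, eps=1e-8):
    """Mean absolute percentage error, in percent.

    `eps` (an assumption) guards against division by a zero true value.
    """
    return 100.0 * sum(abs(t - p) / max(abs(t), eps)
                       for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [10.0, 1.0]   # one high and one low wind speed, in m/s
pred_a = [11.0, 1.0]   # 1 m/s error on the high wind speed
pred_b = [10.0, 2.0]   # the same 1 m/s error on the low wind speed

print(mse(y_true, pred_a), mse(y_true, pred_b))    # 0.5 0.5
print(mape(y_true, pred_a), mape(y_true, pred_b))  # 5.0 50.0
```

A model that shifts its errors toward low-wind-speed samples can therefore hold MSE steady, or even lower it, while MAPE rises.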

In conclusion, while these recurrent neural network methods have the capacity to predict the wind speeds at downstream turbines given historic data, their structure and parameterization need to be fine-tuned if they are to generate accurate predictions more than a single time-step into the future. Once an architecture is found that yields ‘good enough’ predictions (within 10%) over a future time horizon of several minutes, the next steps would be to: a) run the learning algorithm in an online fashion so that it adapts to changing wind speed and direction trends and to changing wind farm layouts (for example, one or more turbines may be taken out of operation due to a malfunction or for maintenance, which can influence the wind speeds at other downstream turbines); b) integrate a prediction of the uncertainty associated with the predictions, especially in the later time-steps of the future time horizon; and c) integrate these learned downstream wind speeds with a method for controlling the turbine orientations (the yaw angles) to maximize the wind farm power output.
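To make the ‘within 10%’ criterion checkable, one option is to measure, for each future time-step, the fraction of forecasts whose relative error is at most 10%, and then count how many leading time-steps meet a required fraction. The NumPy sketch below reads ‘within 10%’ as relative (percentage) error; the required fraction of 0.9 and the function names `fraction_within_tolerance` and `usable_horizon` are hypothetical choices, not values from the project.

```python
import numpy as np


def fraction_within_tolerance(y_true, y_pred, rel_tol=0.10):
    """Per-time-step fraction of forecasts with relative error <= rel_tol.

    y_true, y_pred: arrays of shape (n_forecasts, horizon), wind speeds > 0.
    Returns an array of shape (horizon,).
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    if y_true.ndim != 2 or y_true.shape != y_pred.shape:
        raise ValueError("expected two arrays of shape (n_forecasts, horizon)")
    if np.any(y_true == 0):
        raise ValueError("relative error is undefined for zero true values")
    rel_err = np.abs(y_pred - y_true) / np.abs(y_true)
    return (rel_err <= rel_tol).mean(axis=0)


def usable_horizon(frac_within, required=0.9):
    """Number of leading time-steps whose fraction within tolerance is >= required."""
    n_steps = 0
    for frac in frac_within:
        if frac < required:
            break
        n_steps += 1
    return n_steps
```

Reporting the usable horizon rather than a single averaged error makes it visible how quickly accuracy degrades over the later time-steps.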

Additional ideas for improving the predictive performance include:

- Adding recurrent dropout, or dropout layers between the recurrent layers, to make overfitting the training data less likely.

- Reducing the number of neurons in each layer, i.e. simplifying the model via pruning techniques, until the training error is more satisfactory, before adding complexity again in the form of additional layers or neurons.

- Focusing on the autoregressive approach for predicting multiple time-steps ahead, which seems more promising than the multi-step ‘single-prediction’ approach.

- Generating more than 10 hours of data to train and test the models on.
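The dropout idea above can be illustrated with recurrent (variational-style) dropout: a single mask is sampled per sequence and applied to the hidden state at every time-step, so the same recurrent connections are dropped throughout the sequence. The NumPy sketch below uses a plain tanh RNN cell instead of an LSTM or GRU cell to stay short; `rnn_forward` and its parameters are hypothetical. In Keras, the `LSTM` and `GRU` layers expose `dropout` and `recurrent_dropout` arguments, and a `Dropout` layer can be placed between stacked recurrent layers.

```python
import numpy as np


def rnn_forward(x_seq, W_x, W_h, b, recurrent_rate=0.0, training=False, rng=None):
    """Forward pass of a simple tanh RNN with recurrent dropout.

    x_seq: (T, n_in); W_x: (n_in, n_h); W_h: (n_h, n_h); b: (n_h,)
    One mask is sampled per sequence and reused at every time-step on the
    hidden state fed back through W_h.
    Returns the hidden states, shape (T, n_h), and the mask that was used.
    """
    if not 0.0 <= recurrent_rate < 1.0:
        raise ValueError("recurrent_rate must be in [0, 1)")
    n_h = W_h.shape[0]
    if training and recurrent_rate > 0.0:
        rng = np.random.default_rng() if rng is None else rng
        keep = 1.0 - recurrent_rate
        # Inverted dropout: kept units are scaled by 1/keep so that the
        # expected recurrent input matches inference, where nothing is dropped.
        mask = (rng.random(n_h) < keep) / keep
    else:
        mask = np.ones(n_h)                  # inference: no dropout
    h = np.zeros(n_h)
    states = []
    for x_t in x_seq:
        h = np.tanh(x_t @ W_x + (h * mask) @ W_h + b)
        states.append(h)
    return np.stack(states), mask
```

Sampling the mask once per sequence, rather than at every step, avoids injecting fresh noise into the recurrent state at each time-step.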

Other ideas for learning strategies include the following:

- Convolutional Neural Networks (CNN) can be combined with recurrent LSTM or GRU cells (known as CNN-LSTM and CNN-GRU, respectively; the latter would require a custom `Conv2DGRUCellModel` class and `Conv2DGRULayer` class) to capture the spatial dependencies of the effective rotor-averaged wind velocities of downstream turbines on the yaw angles and wind-field measurements of the upstream turbines in their neighborhood.

- Learning, in an online fashion during wind farm operation, the parameters of a combination of analytical wind farm wind-field models, such that the combination can adapt to turbines temporarily ceasing operation and to time-varying wind direction and wind speed.

- Forecasting the prevailing wind direction incident on the wind farm for controls purposes.
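A CNN-GRU can be built around a convolutional GRU (ConvGRU) cell, in which the matrix products of a standard GRU are replaced by 2-D convolutions, so the hidden state is itself a spatial grid. The NumPy sketch below is a forward pass only, with no training, and follows one common GRU gating convention. It assumes the wind farm has been rasterized onto an H x W grid whose input channels could hold, for example, wind-speed and yaw-angle maps. All names (`conv2d_same`, `init_conv_gru`, `conv_gru_step`, `conv_gru_forward`) are hypothetical and are not the `Conv2DGRUCellModel` or `Conv2DGRULayer` classes named in the list.

```python
import numpy as np


def conv2d_same(x, w):
    """'Same'-padded 2-D cross-correlation (the 'convolution' of deep-learning libraries).

    x: (C_in, H, W) input grid; w: (C_out, C_in, k, k) kernel with odd k.
    Returns an array of shape (C_out, H, W).
    """
    c_out, _, k, _ = w.shape
    p = k // 2
    _, height, width = x.shape
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))   # zero padding keeps H and W
    out = np.zeros((c_out, height, width))
    for i in range(k):
        for j in range(k):
            patch = xp[:, i:i + height, j:j + width]          # (C_in, H, W)
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], patch)
    return out


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_conv_gru(c_in, c_h, k=3, seed=0):
    """Small random kernels and zero biases for the three ConvGRU convolutions."""
    rng = np.random.default_rng(seed)
    shape = (c_h, c_in + c_h, k, k)   # each convolution sees [input, hidden] channels
    params = {name: 0.1 * rng.standard_normal(shape) for name in ("W_z", "W_r", "W_h")}
    params.update({name: np.zeros(c_h) for name in ("b_z", "b_r", "b_h")})
    return params


def conv_gru_step(x, h, params):
    """One ConvGRU update. x: (C_in, H, W); h: (C_h, H, W)."""
    xh = np.concatenate([x, h], axis=0)
    z = sigmoid(conv2d_same(xh, params["W_z"]) + params["b_z"][:, None, None])  # update gate
    r = sigmoid(conv2d_same(xh, params["W_r"]) + params["b_r"][:, None, None])  # reset gate
    xrh = np.concatenate([x, r * h], axis=0)
    h_cand = np.tanh(conv2d_same(xrh, params["W_h"]) + params["b_h"][:, None, None])
    return (1.0 - z) * h + z * h_cand   # blend old state and candidate state


def conv_gru_forward(x_seq, params, c_h):
    """Run the cell over x_seq of shape (T, C_in, H, W); return the final state."""
    _, _, height, width = x_seq.shape
    h = np.zeros((c_h, height, width))
    for x_t in x_seq:
        h = conv_gru_step(x_t, h, params)
    return h
```

Reading the final hidden state at the grid cells occupied by downstream turbines, followed by a small dense read-out, would be one way to obtain per-turbine wind-speed predictions from such a cell.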

Thank you for reading! Anyone with feedback, as well as potential collaborators and curious hobbyists, is welcome to reach out.